In my previous post, I shared how I addressed a high operations-per-second issue in the database by leveraging Redis streams. The solution was working well—until we introduced a new feature.

This feature provided not only the last traded price (LTP) of stocks but also additional information such as market depth. While useful, it significantly increased the volume and frequency of data updates. As a result, Redis streams began consuming excessive amounts of RAM—about 8 GB within just 5 minutes—eventually crashing the system.

Upon investigation, I found a mistake in my initial implementation: I was reading from the Redis stream but never acknowledging or deleting the messages. Reading from a stream does not remove its entries, and Redis keeps stream data in memory for high throughput, so messages that had already been processed stayed in the stream and kept accumulating until they overwhelmed the system.

Two Ways to Solve the Problem

To address this issue, there are two viable approaches:

Acknowledge Messages via Redis Consumer Groups and Then Delete

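Using consumer groups, you can acknowledge processed messages (XACK) and then remove them from the stream (XDEL). XACK on its own only clears the entry from the group's pending list; the XDEL is what actually frees the memory. Together they ensure no data is retained unnecessarily.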
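To make the first approach concrete, here is a minimal sketch of one read, acknowledge, delete cycle. It is not the code from my service: the function name and the stream, group, and consumer names are made up for illustration, `client` is assumed to be a redis-py style client (for example `redis.Redis(decode_responses=True)`) returning the default RESP2 response shape, and the group is assumed to already exist (created with XGROUP CREATE).

```python
from typing import Any, Callable


def consume_group_batch(
    client: Any,
    stream: str,
    group: str,
    consumer: str,
    handle: Callable[[dict], None],
    count: int = 100,
) -> int:
    """Read one batch for `consumer`, then XACK and XDEL every handled entry.

    `client` is assumed to expose redis-py style xreadgroup/xack/xdel methods.
    Returns the number of entries processed.
    """
    # ">" asks for entries that were never delivered to this group before.
    response = client.xreadgroup(
        group, consumer, {stream: ">"}, count=count, block=1000
    )

    done = []
    for _stream_name, entries in response or []:
        for entry_id, fields in entries:
            handle(fields)          # e.g. write the tick to the database
            done.append(entry_id)

    if done:
        client.xack(stream, group, *done)  # clear the group's pending list
        client.xdel(stream, *done)         # free the memory held by the entries
    return len(done)
```

Calling this in a loop keeps the stream from growing beyond whatever has not been processed yet. One caveat: XDEL removes the entry for every consumer group, so this only fits when a single group reads the stream.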

Delete Messages by Tracking the Last Processed ID

Instead of using consumer groups, you can track the ID of the last processed message yourself, read only the entries newer than that ID, and delete each entry (XDEL) once it has been processed. This method is simpler and works well for single consumers or when scaling is not yet required.
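A rough sketch of the second approach could look like the following. Again, this is an illustration under assumptions rather than my production code: the function name and the `ticks` stream name are hypothetical, and `client` is assumed to be a redis-py style client (for example `redis.Redis(decode_responses=True)`) returning the default RESP2 response shape.

```python
from typing import Any, Callable


def consume_and_delete(
    client: Any,
    stream: str,
    last_id: str,
    handle: Callable[[dict], None],
    count: int = 100,
) -> str:
    """Read entries newer than `last_id`, process them, then XDEL them.

    `client` is assumed to expose redis-py style xread/xdel methods.
    Returns the ID to pass in on the next call.
    """
    # XREAD only returns entries with an ID greater than `last_id`.
    response = client.xread({stream: last_id}, count=count, block=1000)

    done = []
    for _stream_name, entries in response or []:
        for entry_id, fields in entries:
            handle(fields)        # e.g. write the tick to the database
            done.append(entry_id)
            last_id = entry_id    # remember how far we have got

    if done:
        client.xdel(stream, *done)  # free the memory held by processed entries
    return last_id
```

The caller starts with `last_id = "0-0"` to read from the beginning of the stream and feeds the returned ID back in on each iteration, for example `last_id = consume_and_delete(client, "ticks", last_id, save_tick)`, where `save_tick` stands in for whatever does the database write.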

My Solution

For this case, I opted for the second approach—deleting messages by tracking the last processed ID—as the issue primarily occurs during market hours when data volume spikes.

By implementing this strategy, I was able to:

- Reduce RAM usage significantly by ensuring messages were deleted after processing.

- Keep Redis memory footprint stable, even during peak load.

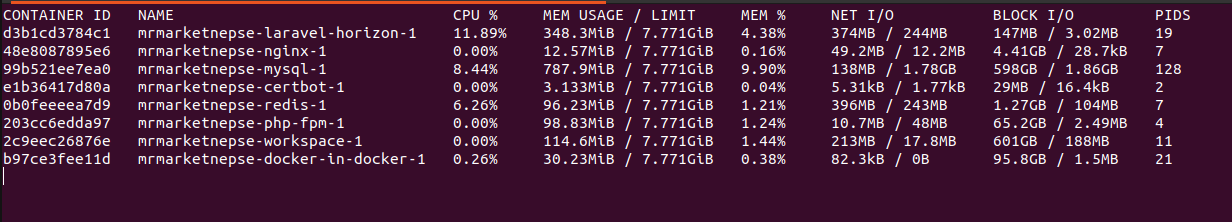

Here is my current `docker stats` snapshot:

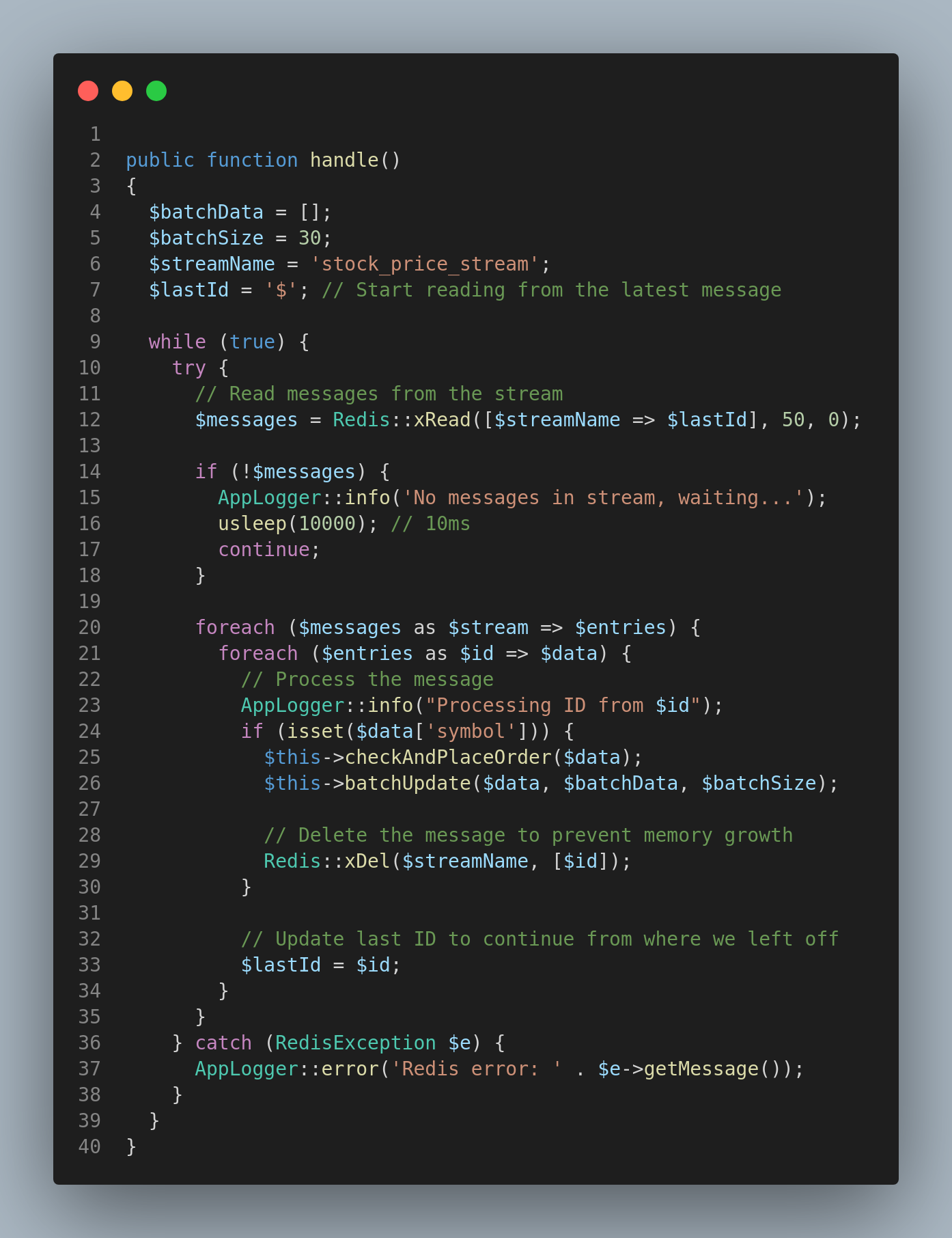

Here is the updated code:

Reference:

https://stackoverflow.com/a/10008222